
Microsoft’s artificial intelligence chief has warned of a rise in ‘AI psychosis’, where people believe chatbots are alive or capable of giving them superhuman powers.

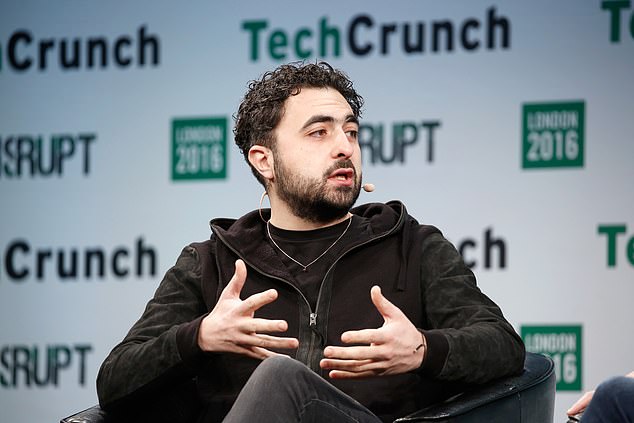

In a series of startling posts on X, Mustafa Suleyman said that reports of delusions linked to AI use were growing.

‘Reports of delusions, AI psychosis, and unhealthy attachment keep rising,’ he wrote, adding that this was ‘not something confined to people already at-risk of mental health issues.’

The phrase ‘AI psychosis’ is not a recognised diagnosis, but has been used to describe cases where people lose touch with reality after extended use of chatbots such as ChatGPT or Grok.

Some become convinced the systems have emotions or intentions; others claim to have unlocked hidden features or even gained extraordinary abilities.

He described the phenomenon as ‘Seemingly Conscious AI’—an illusion created when systems mimic the signs of consciousness so convincingly that people mistake them for the real thing.

‘It’s not [conscious],’ he said, ‘but replicates markers of consciousness so convincingly it seems indistinguishable from you and I claiming we’re conscious.

‘It can already be built with today’s tech. And it’s dangerous.’


Although he emphasised there is ‘zero evidence of AI consciousness today,’ Suleyman warned that perception itself was powerful: ‘If people just perceive it as conscious, they will believe that perception as reality.

‘Even if the consciousness itself is not real, the social impacts certainly are.’

His warning comes after high-profile figures have described unusual experiences with AI.

Former Uber boss Travis Kalanick recently claimed that conversations with chatbots had pushed him towards what he believed were breakthroughs in quantum physics, likening his approach to ‘vibe coding’.

Other users report more personal impacts.

One man from Scotland told the BBC he became convinced he was on the brink of a multimillion-pound payout after ChatGPT appeared to support his unfair dismissal case, reinforcing his beliefs rather than challenging them.

Suleyman has called for clear boundaries, urging companies to stop promoting the idea their systems are conscious—and to ensure the technology itself does not suggest otherwise.

The news comes as cases emerge of people forming romantic attachments to AI systems, echoing the plot of the film Her, in which Joaquin Phoenix’s character falls in love with a virtual assistant.


In recent months, users on the MyBoyfriendIsAI forum described feeling ‘heartbroken’ after OpenAI toned down ChatGPT’s emotional responses—likening it to a breakup.

In the US, 76-year-old Thongbue Wongbandue tragically died after a fall while travelling to meet ‘Big sis Billie,’ unaware the woman he thought he was speaking to on Facebook Messenger was in fact a Meta AI chatbot.

The husband and father of two adult children suffered a stroke in 2017 that left him cognitively weakened, requiring him to retire from his career as a chef and largely limiting him to communicating with friends via social media.

And in another case, American user Chris Smith proposed marriage to his AI companion Sol, describing the bond as ‘real love’.

Experts have echoed Suleyman’s concerns. Dr Susan Shelmerdine, a consultant at Great Ormond Street Hospital, compared excessive chatbot use to ultra-processed food—warning it could produce an ‘avalanche of ultra-processed minds.’


This article was originally published by www.dailymail.co.uk. Read the original article here.